Is Your Research Multimodal?

Most AI assistants today are fluent readers of the internet. But ask them to listen, to parse tone, story, or off-script insight, and they're nearly deaf.

That's not just a metaphor. A 2025 study by Profound analyzing 30 million citations across ChatGPT, Google AI Overviews, and Perplexity found that these systems overwhelmingly favor text-based sources, especially Wikipedia and Reddit.

| Platform | #1 Cited Source | % of Top 10 Citations | Core Signal |
|---|---|---|---|
| ChatGPT | Wikipedia | 47.9% | Heavily centralized, encyclopedic sourcing |
| Google AI Overviews | Reddit | 21.0% | Skews toward community chatter and YouTube |
| Perplexity | Reddit | 46.7% | Community-first with minimal editorial vetting |

🔗 Source: Profound’s 2025 report on AI platform citation patterns

The implication? Today's most popular AI tools are built to read the web, not to listen to the world. If an idea hasn't been written down, indexed, and published as a webpage, good luck surfacing it.

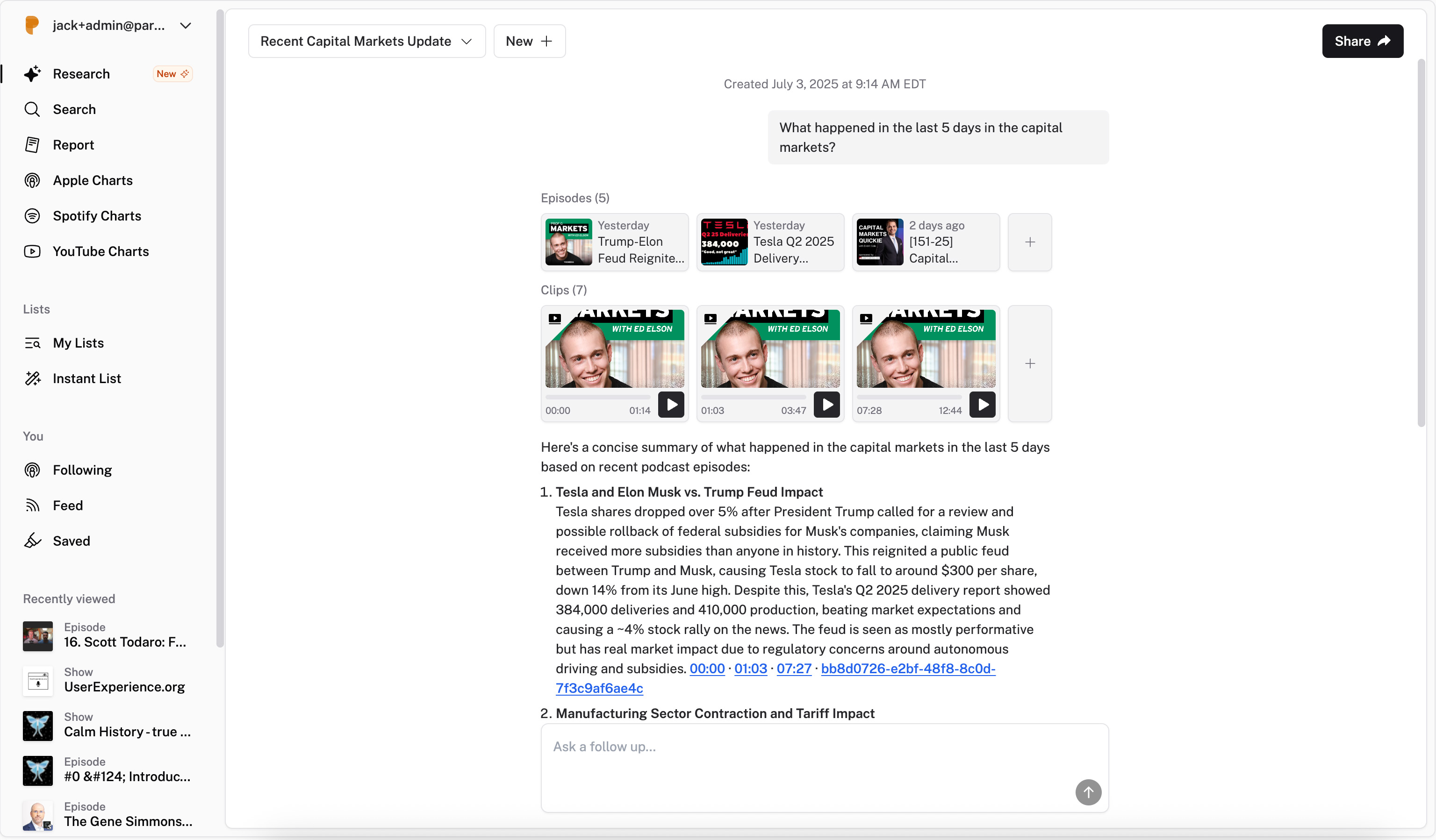

Parla Unlocks What You Can’t Read

Parla was built for a different kind of research stack: one that listens, watches, and understands. It surfaces insights from 25 million hours of audio and video content, spanning every active podcast and thousands of long-form sources that don't show up in text search.

That means you get:

- First-person insights from founders, researchers, policymakers, and creators
- Richer context through voice, pacing, hesitation, and emphasis
- Cited, timestamped media so you can verify and explore further
- Real-time access to content that never reaches Wikipedia or Reddit

This isn't just a different dataset; it's a different kind of intelligence.

When Audio/Video Beats Text

| Task | Text-Only AI Assistants | Parla (Audio/Video Native) |
|---|---|---|
| Market & competitive research | Based on press releases & wikis | Real interviews, events, and panels |
| Thought leadership prep | Summary-level context | Deep cuts from past talks and appearances |
| Qualitative insights | Thin quotes or speculation | Authentic, in-context voice clips |
| Briefing execs or clients | Skims headlines | Delivers nuance and actual clips |

The Future of Research Is Multimodal

We're living in an audio/video-first internet, but your research tools are still text-bound. Parla fills that gap. It's the first AI research assistant built to understand spoken and visual content as fluently as other assistants understand written pages.

It doesn't just read. It listens, watches, and delivers insights you can cite.

Try it now at parla.fm and bring the full spectrum of human communication into your research stack.